View on TensorFlow.org View on TensorFlow.org

|

Run in Google Colab Run in Google Colab

|

View source on GitHub View source on GitHub

|

Download notebook Download notebook

|

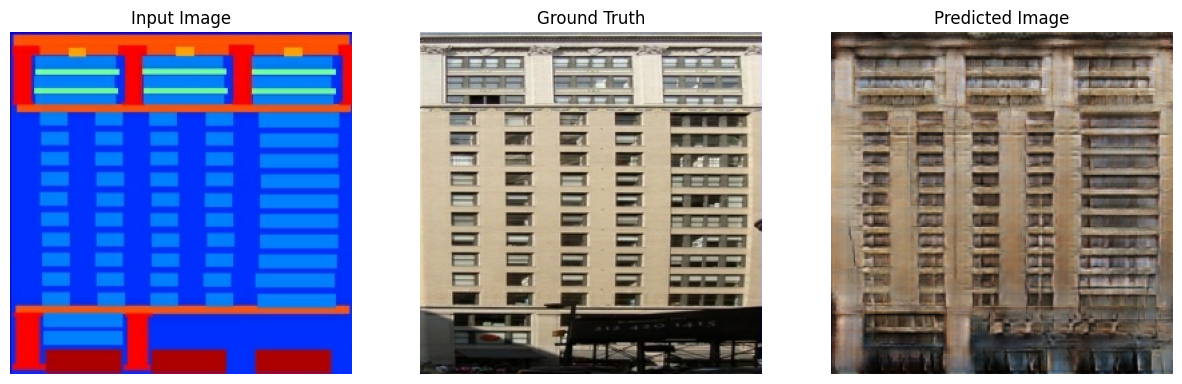

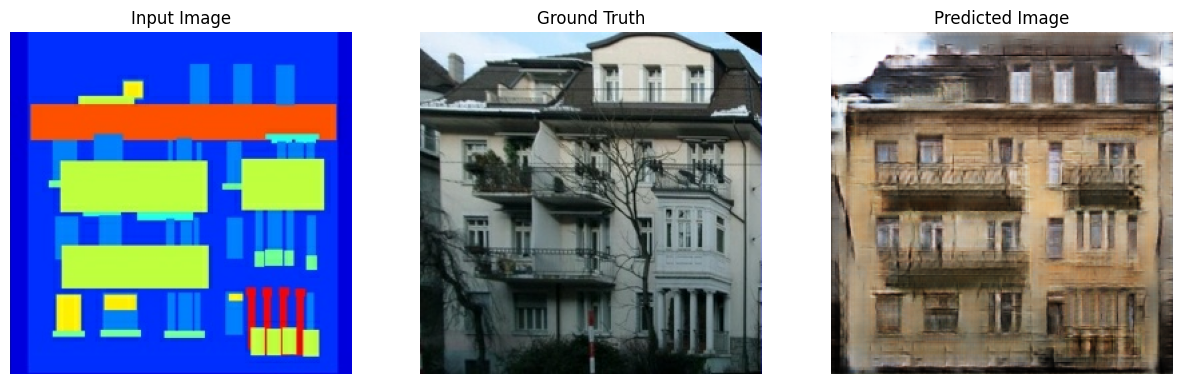

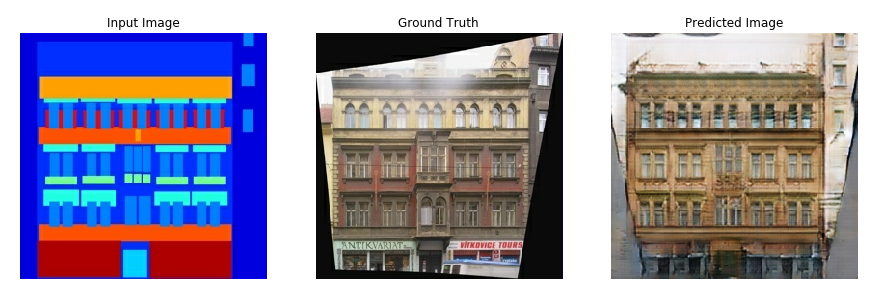

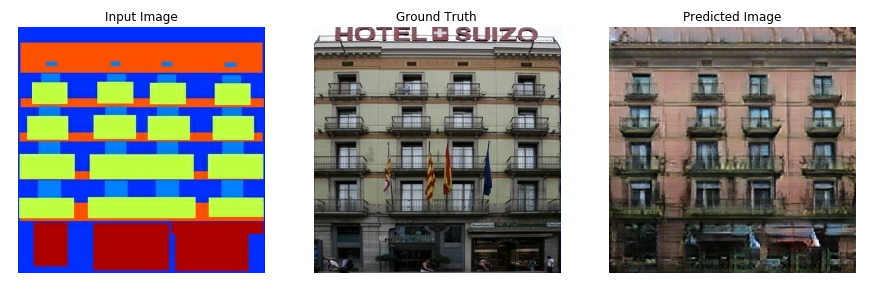

This tutorial demonstrates how to build and train a conditional generative adversarial network (cGAN) called pix2pix that learns a mapping from input images to output images, as described in Image-to-image translation with conditional adversarial networks by Isola et al. (2017). pix2pix is not application specific—it can be applied to a wide range of tasks, including synthesizing photos from label maps, generating colorized photos from black and white images, turning Google Maps photos into aerial images, and even transforming sketches into photos.

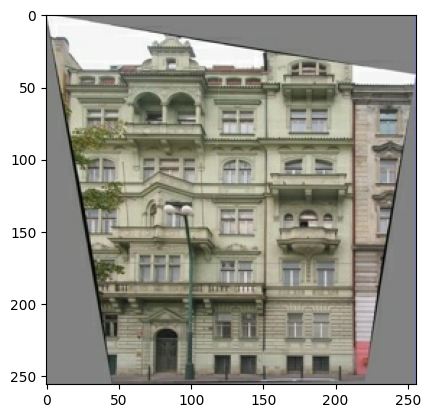

In this example, your network will generate images of building facades using the CMP Facade Database provided by the Center for Machine Perception at the Czech Technical University in Prague. To keep it short, you will use a preprocessed copy of this dataset created by the pix2pix authors.

In the pix2pix cGAN, you condition on input images and generate corresponding output images. cGANs were first proposed in Conditional Generative Adversarial Nets (Mirza and Osindero, 2014)

The architecture of your network will contain:

- A generator with a U-Net-based architecture.

- A discriminator represented by a convolutional PatchGAN classifier (proposed in the pix2pix paper).

Note that each epoch can take around 15 seconds on a single V100 GPU.

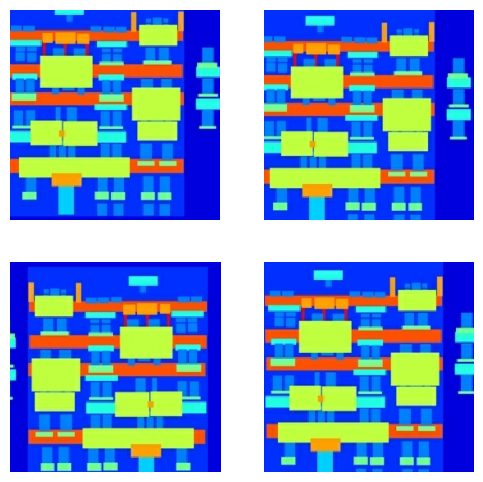

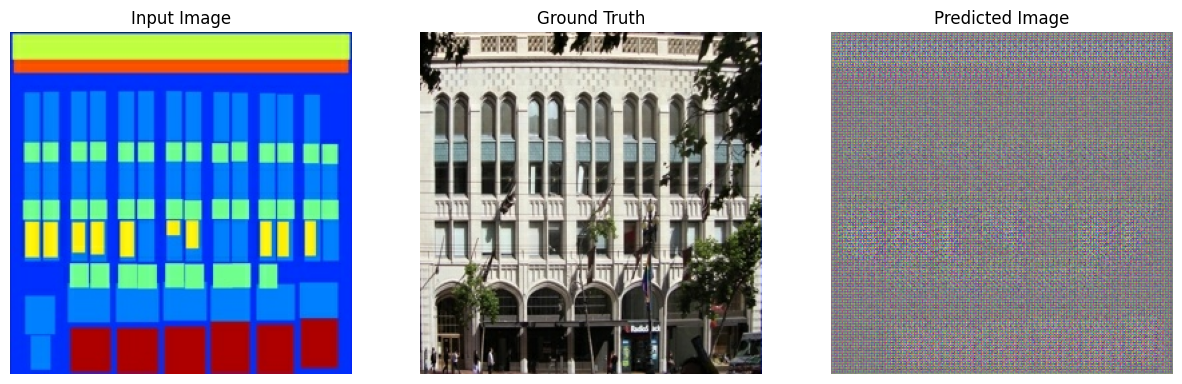

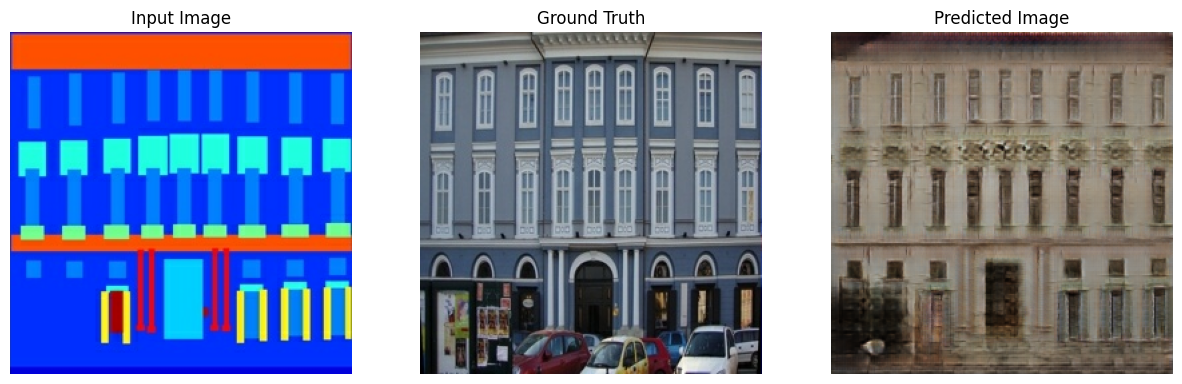

Below are some examples of the output generated by the pix2pix cGAN after training for 200 epochs on the facades dataset (80k steps).

Import TensorFlow and other libraries

import tensorflow as tf

import os

import pathlib

import time

import datetime

from matplotlib import pyplot as plt

from IPython import display

2024-07-19 03:31:24.125490: E external/local_xla/xla/stream_executor/cuda/cuda_fft.cc:485] Unable to register cuFFT factory: Attempting to register factory for plugin cuFFT when one has already been registered 2024-07-19 03:31:24.146445: E external/local_xla/xla/stream_executor/cuda/cuda_dnn.cc:8454] Unable to register cuDNN factory: Attempting to register factory for plugin cuDNN when one has already been registered 2024-07-19 03:31:24.152865: E external/local_xla/xla/stream_executor/cuda/cuda_blas.cc:1452] Unable to register cuBLAS factory: Attempting to register factory for plugin cuBLAS when one has already been registered

Load the dataset

Download the CMP Facade Database data (30MB). Additional datasets are available in the same format here. In Colab you can select other datasets from the drop-down menu. Note that some of the other datasets are significantly larger (edges2handbags is 8GB in size).

dataset_name = "facades"

_URL = f'http://efrosgans.eecs.berkeley.edu/pix2pix/datasets/{dataset_name}.tar.gz'

path_to_zip = tf.keras.utils.get_file(

fname=f"{dataset_name}.tar.gz",

origin=_URL,

extract=True)

path_to_zip = pathlib.Path(path_to_zip)

PATH = path_to_zip.parent/dataset_name

Downloading data from http://efrosgans.eecs.berkeley.edu/pix2pix/datasets/facades.tar.gz 30168306/30168306 ━━━━━━━━━━━━━━━━━━━━ 9s 0us/step

list(PATH.parent.iterdir())

[PosixPath('/home/kbuilder/.keras/datasets/fashion-mnist'),

PosixPath('/home/kbuilder/.keras/datasets/mnist.npz'),

PosixPath('/home/kbuilder/.keras/datasets/facades.tar.gz'),

PosixPath('/home/kbuilder/.keras/datasets/facades'),

PosixPath('/home/kbuilder/.keras/datasets/kandinsky5.jpg'),

PosixPath('/home/kbuilder/.keras/datasets/YellowLabradorLooking_new.jpg')]

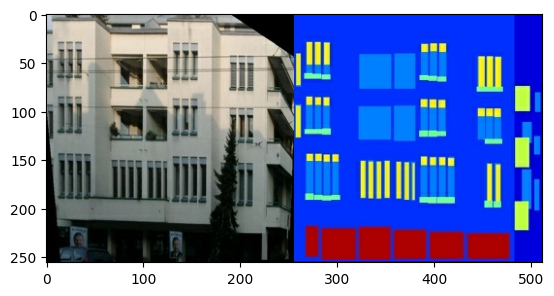

Each original image is of size 256 x 512 containing two 256 x 256 images:

sample_image = tf.io.read_file(str(PATH / 'train/1.jpg'))

sample_image = tf.io.decode_jpeg(sample_image)

print(sample_image.shape)

(256, 512, 3) WARNING: All log messages before absl::InitializeLog() is called are written to STDERR I0000 00:00:1721359896.204955 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.208749 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.212317 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.215836 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.227569 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.231061 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.234321 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.237629 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.240954 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.244377 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.247660 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359896.251030 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.501529 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.503601 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.505574 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.507627 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.509728 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.511651 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.513501 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.515455 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.517398 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.519316 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.521179 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.523155 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.561414 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.563429 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.565345 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.567376 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.569349 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.571295 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.573186 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.575173 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.577153 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.579658 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.581976 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355 I0000 00:00:1721359897.584436 50782 cuda_executor.cc:1015] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero. See more at https://github.com/torvalds/linux/blob/v6.0/Documentation/ABI/testing/sysfs-bus-pci#L344-L355

plt.figure()

plt.imshow(sample_image)

<matplotlib.image.AxesImage at 0x7f7f04ab4fa0>

You need to separate real building facade images from the architecture label images—all of which will be of size 256 x 256.

Define a function that loads image files and outputs two image tensors:

def load(image_file):

# Read and decode an image file to a uint8 tensor

image = tf.io.read_file(image_file)

image = tf.io.decode_jpeg(image)

# Split each image tensor into two tensors:

# - one with a real building facade image

# - one with an architecture label image

w = tf.shape(image)[1]

w = w // 2

input_image = image[:, w:, :]

real_image = image[:, :w, :]

# Convert both images to float32 tensors

input_image = tf.cast(input_image, tf.float32)

real_image = tf.cast(real_image, tf.float32)

return input_image, real_image

Plot a sample of the input (architecture label image) and real (building facade photo) images:

inp, re = load(str(PATH / 'train/100.jpg'))

# Casting to int for matplotlib to display the images

plt.figure()

plt.imshow(inp / 255.0)

plt.figure()

plt.imshow(re / 255.0)

<matplotlib.image.AxesImage at 0x7f7ef010cdc0>

As described in the pix2pix paper, you need to apply random jittering and mirroring to preprocess the training set.

Define several functions that:

- Resize each

256 x 256image to a larger height and width—286 x 286. - Randomly crop it back to

256 x 256. - Randomly flip the image horizontally i.e., left to right (random mirroring).

- Normalize the images to the

[-1, 1]range.

# The facade training set consist of 400 images

BUFFER_SIZE = 400

# The batch size of 1 produced better results for the U-Net in the original pix2pix experiment

BATCH_SIZE = 1

# Each image is 256x256 in size

IMG_WIDTH = 256

IMG_HEIGHT = 256

def resize(input_image, real_image, height, width):

input_image = tf.image.resize(input_image, [height, width],

method=tf.image.ResizeMethod.NEAREST_NEIGHBOR)

real_image = tf.image.resize(real_image, [height, width],

method=tf.image.ResizeMethod.NEAREST_NEIGHBOR)

return input_image, real_image

def random_crop(input_image, real_image):

stacked_image = tf.stack([input_image, real_image], axis=0)

cropped_image = tf.image.random_crop(

stacked_image, size=[2, IMG_HEIGHT, IMG_WIDTH, 3])

return cropped_image[0], cropped_image[1]

# Normalizing the images to [-1, 1]

def normalize(input_image, real_image):

input_image = (input_image / 127.5) - 1

real_image = (real_image / 127.5) - 1

return input_image, real_image

@tf.function()

def random_jitter(input_image, real_image):

# Resizing to 286x286

input_image, real_image = resize(input_image, real_image, 286, 286)

# Random cropping back to 256x256

input_image, real_image = random_crop(input_image, real_image)

if tf.random.uniform(()) > 0.5:

# Random mirroring

input_image = tf.image.flip_left_right(input_image)

real_image = tf.image.flip_left_right(real_image)

return input_image, real_image

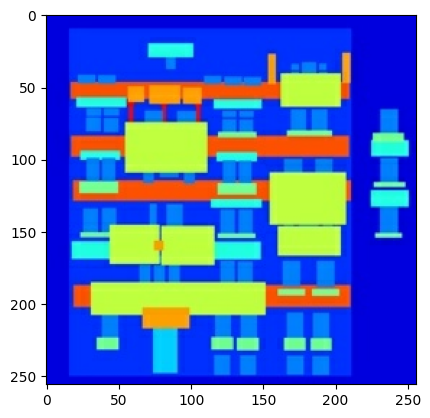

You can inspect some of the preprocessed output:

plt.figure(figsize=(6, 6))

for i in range(4):

rj_inp, rj_re = random_jitter(inp, re)

plt.subplot(2, 2, i + 1)

plt.imshow(rj_inp / 255.0)

plt.axis('off')

plt.show()

Having checked that the loading and preprocessing works, let's define a couple of helper functions that load and preprocess the training and test sets:

def load_image_train(image_file):

input_image, real_image = load(image_file)

input_image, real_image = random_jitter(input_image, real_image)

input_image, real_image = normalize(input_image, real_image)

return input_image, real_image

def load_image_test(image_file):

input_image, real_image = load(image_file)

input_image, real_image = resize(input_image, real_image,

IMG_HEIGHT, IMG_WIDTH)

input_image, real_image = normalize(input_image, real_image)

return input_image, real_image

Build an input pipeline with tf.data

train_dataset = tf.data.Dataset.list_files(str(PATH / 'train/*.jpg'))

train_dataset = train_dataset.map(load_image_train,

num_parallel_calls=tf.data.AUTOTUNE)

train_dataset = train_dataset.shuffle(BUFFER_SIZE)

train_dataset = train_dataset.batch(BATCH_SIZE)

try:

test_dataset = tf.data.Dataset.list_files(str(PATH / 'test/*.jpg'))

except tf.errors.InvalidArgumentError:

test_dataset = tf.data.Dataset.list_files(str(PATH / 'val/*.jpg'))

test_dataset = test_dataset.map(load_image_test)

test_dataset = test_dataset.batch(BATCH_SIZE)

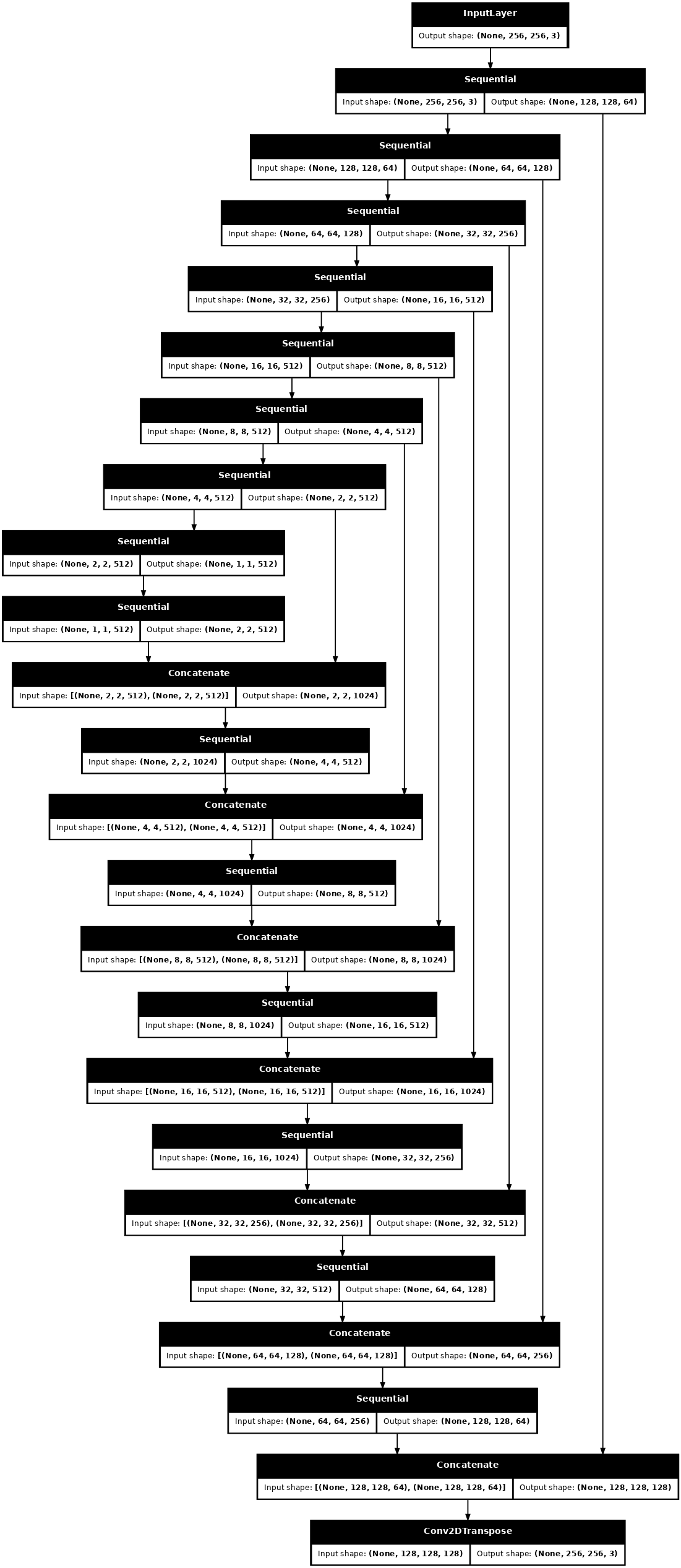

Build the generator

The generator of your pix2pix cGAN is a modified U-Net. A U-Net consists of an encoder (downsampler) and decoder (upsampler). (You can find out more about it in the Image segmentation tutorial and on the U-Net project website.)

- Each block in the encoder is: Convolution -> Batch normalization -> Leaky ReLU

- Each block in the decoder is: Transposed convolution -> Batch normalization -> Dropout (applied to the first 3 blocks) -> ReLU

- There are skip connections between the encoder and decoder (as in the U-Net).

Define the downsampler (encoder):

OUTPUT_CHANNELS = 3

def downsample(filters, size, apply_batchnorm=True):

initializer = tf.random_normal_initializer(0., 0.02)

result = tf.keras.Sequential()

result.add(

tf.keras.layers.Conv2D(filters, size, strides=2, padding='same',

kernel_initializer=initializer, use_bias=False))

if apply_batchnorm:

result.add(tf.keras.layers.BatchNormalization())

result.add(tf.keras.layers.LeakyReLU())

return result

down_model = downsample(3, 4)

down_result = down_model(tf.expand_dims(inp, 0))

print (down_result.shape)

(1, 128, 128, 3) W0000 00:00:1721359899.718320 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.755095 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.796418 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.805108 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.806298 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.809605 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.812724 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.813982 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.815258 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.816498 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.817651 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.818795 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.819977 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.821169 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced

Define the upsampler (decoder):

def upsample(filters, size, apply_dropout=False):

initializer = tf.random_normal_initializer(0., 0.02)

result = tf.keras.Sequential()

result.add(

tf.keras.layers.Conv2DTranspose(filters, size, strides=2,

padding='same',

kernel_initializer=initializer,

use_bias=False))

result.add(tf.keras.layers.BatchNormalization())

if apply_dropout:

result.add(tf.keras.layers.Dropout(0.5))

result.add(tf.keras.layers.ReLU())

return result

up_model = upsample(3, 4)

up_result = up_model(down_result)

print (up_result.shape)

(1, 256, 256, 3) W0000 00:00:1721359899.956467 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.966117 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.967385 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359899.968681 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.032340 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.034957 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.061310 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.072420 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced

Define the generator with the downsampler and the upsampler:

def Generator():

inputs = tf.keras.layers.Input(shape=[256, 256, 3])

down_stack = [

downsample(64, 4, apply_batchnorm=False), # (batch_size, 128, 128, 64)

downsample(128, 4), # (batch_size, 64, 64, 128)

downsample(256, 4), # (batch_size, 32, 32, 256)

downsample(512, 4), # (batch_size, 16, 16, 512)

downsample(512, 4), # (batch_size, 8, 8, 512)

downsample(512, 4), # (batch_size, 4, 4, 512)

downsample(512, 4), # (batch_size, 2, 2, 512)

downsample(512, 4), # (batch_size, 1, 1, 512)

]

up_stack = [

upsample(512, 4, apply_dropout=True), # (batch_size, 2, 2, 1024)

upsample(512, 4, apply_dropout=True), # (batch_size, 4, 4, 1024)

upsample(512, 4, apply_dropout=True), # (batch_size, 8, 8, 1024)

upsample(512, 4), # (batch_size, 16, 16, 1024)

upsample(256, 4), # (batch_size, 32, 32, 512)

upsample(128, 4), # (batch_size, 64, 64, 256)

upsample(64, 4), # (batch_size, 128, 128, 128)

]

initializer = tf.random_normal_initializer(0., 0.02)

last = tf.keras.layers.Conv2DTranspose(OUTPUT_CHANNELS, 4,

strides=2,

padding='same',

kernel_initializer=initializer,

activation='tanh') # (batch_size, 256, 256, 3)

x = inputs

# Downsampling through the model

skips = []

for down in down_stack:

x = down(x)

skips.append(x)

skips = reversed(skips[:-1])

# Upsampling and establishing the skip connections

for up, skip in zip(up_stack, skips):

x = up(x)

x = tf.keras.layers.Concatenate()([x, skip])

x = last(x)

return tf.keras.Model(inputs=inputs, outputs=x)

Visualize the generator model architecture:

generator = Generator()

tf.keras.utils.plot_model(generator, show_shapes=True, dpi=64)

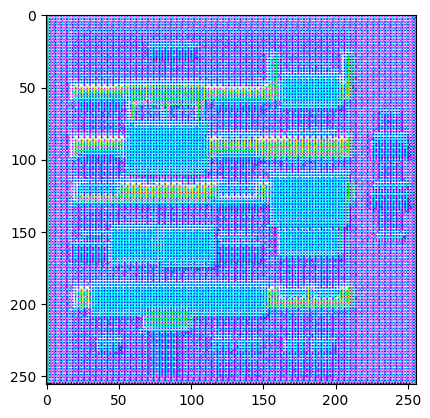

Test the generator:

gen_output = generator(inp[tf.newaxis, ...], training=False)

plt.imshow(gen_output[0, ...])

W0000 00:00:1721359900.671719 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.673330 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.674519 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.675704 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.676891 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.678060 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.679239 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.680422 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.681688 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.682914 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.684134 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.685368 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.686594 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.696615 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.698253 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.699868 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.701514 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.703176 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.704889 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.706617 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.708387 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.710162 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.711946 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.729505 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.731473 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.733603 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.735796 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.749459 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.751191 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.752880 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.754633 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.756351 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.758176 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.759978 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.761797 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.763982 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.765995 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.768182 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.770197 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.772340 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.774476 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.788140 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.789908 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.796061 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.798294 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.800387 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.802633 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.804938 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.807243 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.809565 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.811753 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.814151 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.816508 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.818862 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.821615 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.824535 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.840491 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.842607 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.844811 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.847090 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.858361 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.861383 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.864640 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.868174 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.871715 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.875222 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.878802 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.882365 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.885920 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.890341 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.895064 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.908674 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.910771 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.912954 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.915237 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.918141 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.921392 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.928162 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.931566 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.934965 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.938568 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.942196 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.945744 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.949298 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.953714 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.958428 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.969329 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.971407 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.973587 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.975864 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.978700 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.981962 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.984143 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.987315 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.990492 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.993934 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.997381 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359900.999762 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.001716 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.004090 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.011318 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.012604 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.013908 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.016285 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.022102 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.023068 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.023931 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.024967 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.026179 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.028715 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.039716 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.052693 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.104314 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.105795 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.106827 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.108382 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.110367 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.117162 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.146530 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.147841 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.148749 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.150093 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.151783 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.157980 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.187361 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.188499 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.189576 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.190942 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.192663 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.215418 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.236438 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.261376 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.291748 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.293302 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.294983 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.296668 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.298498 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.322738 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.369839 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.371455 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.373105 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.374837 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.376638 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.388757 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.417997 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.419590 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.421095 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.422633 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.431243 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.434442 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.452741 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.453657 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.454543 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.455406 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.457607 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.459733 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.463348 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359901.470809 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers). Got range [-1.0..1.0]. <matplotlib.image.AxesImage at 0x7f7e7c1c19a0>

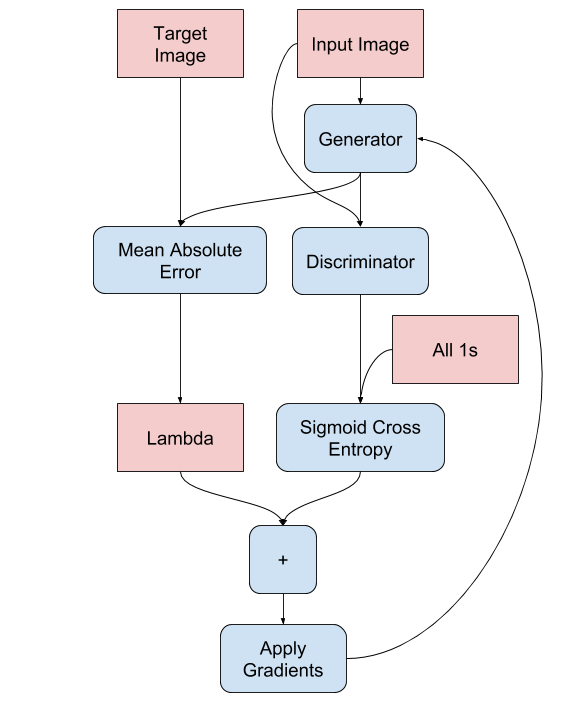

Define the generator loss

GANs learn a loss that adapts to the data, while cGANs learn a structured loss that penalizes a possible structure that differs from the network output and the target image, as described in the pix2pix paper.

- The generator loss is a sigmoid cross-entropy loss of the generated images and an array of ones.

- The pix2pix paper also mentions the L1 loss, which is a MAE (mean absolute error) between the generated image and the target image.

- This allows the generated image to become structurally similar to the target image.

- The formula to calculate the total generator loss is

gan_loss + LAMBDA * l1_loss, whereLAMBDA = 100. This value was decided by the authors of the paper.

LAMBDA = 100

loss_object = tf.keras.losses.BinaryCrossentropy(from_logits=True)

def generator_loss(disc_generated_output, gen_output, target):

gan_loss = loss_object(tf.ones_like(disc_generated_output), disc_generated_output)

# Mean absolute error

l1_loss = tf.reduce_mean(tf.abs(target - gen_output))

total_gen_loss = gan_loss + (LAMBDA * l1_loss)

return total_gen_loss, gan_loss, l1_loss

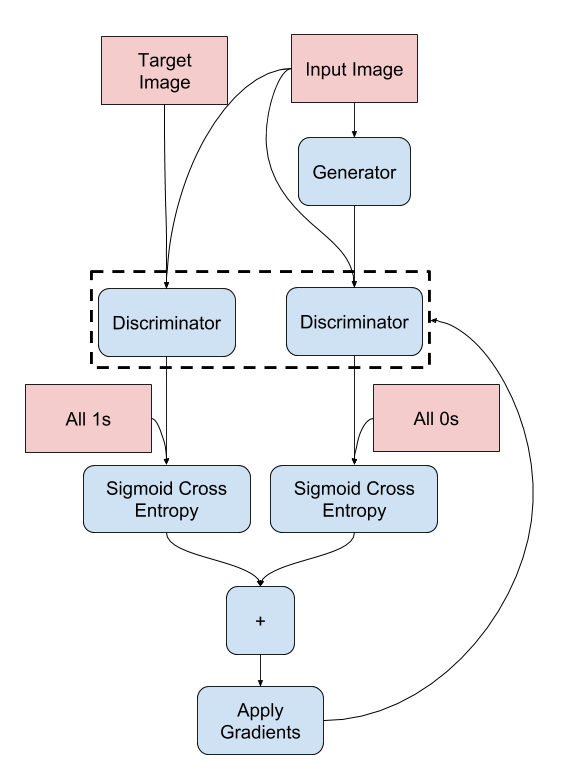

The training procedure for the generator is as follows:

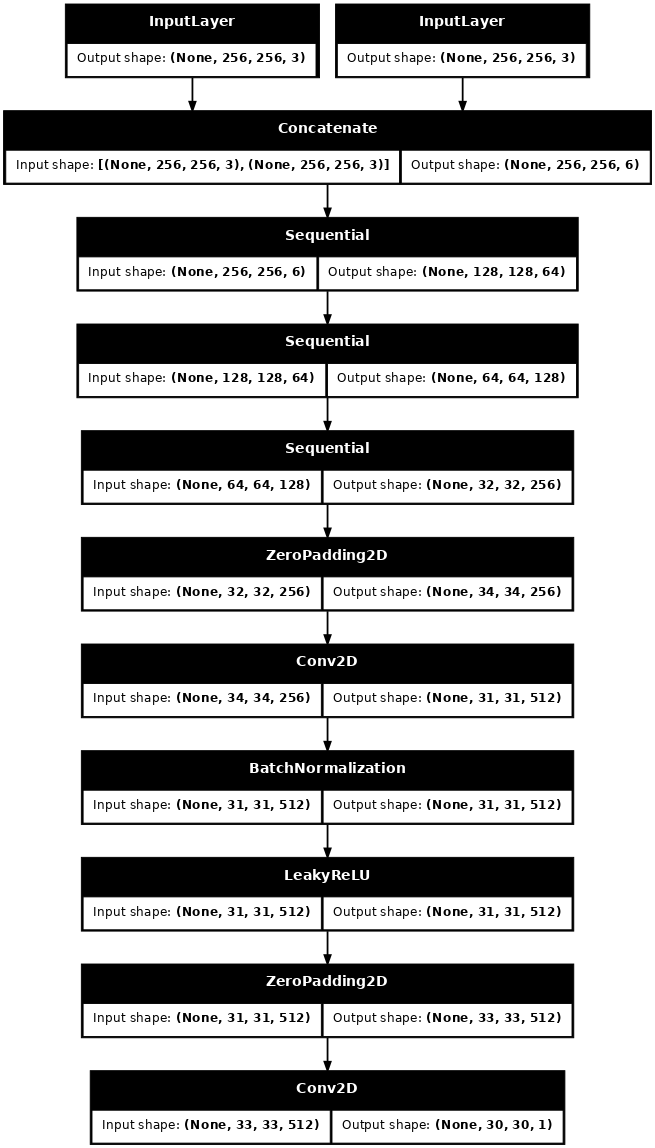

Build the discriminator

The discriminator in the pix2pix cGAN is a convolutional PatchGAN classifier—it tries to classify if each image patch is real or not real, as described in the pix2pix paper.

- Each block in the discriminator is: Convolution -> Batch normalization -> Leaky ReLU.

- The shape of the output after the last layer is

(batch_size, 30, 30, 1). - Each

30 x 30image patch of the output classifies a70 x 70portion of the input image. - The discriminator receives 2 inputs:

- The input image and the target image, which it should classify as real.

- The input image and the generated image (the output of the generator), which it should classify as fake.

- Use

tf.concat([inp, tar], axis=-1)to concatenate these 2 inputs together.

Let's define the discriminator:

def Discriminator():

initializer = tf.random_normal_initializer(0., 0.02)

inp = tf.keras.layers.Input(shape=[256, 256, 3], name='input_image')

tar = tf.keras.layers.Input(shape=[256, 256, 3], name='target_image')

x = tf.keras.layers.concatenate([inp, tar]) # (batch_size, 256, 256, channels*2)

down1 = downsample(64, 4, False)(x) # (batch_size, 128, 128, 64)

down2 = downsample(128, 4)(down1) # (batch_size, 64, 64, 128)

down3 = downsample(256, 4)(down2) # (batch_size, 32, 32, 256)

zero_pad1 = tf.keras.layers.ZeroPadding2D()(down3) # (batch_size, 34, 34, 256)

conv = tf.keras.layers.Conv2D(512, 4, strides=1,

kernel_initializer=initializer,

use_bias=False)(zero_pad1) # (batch_size, 31, 31, 512)

batchnorm1 = tf.keras.layers.BatchNormalization()(conv)

leaky_relu = tf.keras.layers.LeakyReLU()(batchnorm1)

zero_pad2 = tf.keras.layers.ZeroPadding2D()(leaky_relu) # (batch_size, 33, 33, 512)

last = tf.keras.layers.Conv2D(1, 4, strides=1,

kernel_initializer=initializer)(zero_pad2) # (batch_size, 30, 30, 1)

return tf.keras.Model(inputs=[inp, tar], outputs=last)

Visualize the discriminator model architecture:

discriminator = Discriminator()

tf.keras.utils.plot_model(discriminator, show_shapes=True, dpi=64)

Test the discriminator:

disc_out = discriminator([inp[tf.newaxis, ...], gen_output], training=False)

plt.imshow(disc_out[0, ..., -1], vmin=-20, vmax=20, cmap='RdBu_r')

plt.colorbar()

W0000 00:00:1721359902.046489 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.047527 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.048236 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.048932 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.049625 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.050316 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.050995 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.051677 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.052427 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.053157 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.053871 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.054577 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.055341 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.087455 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.089562 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.091668 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.093934 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.095465 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.097113 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.098793 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.100420 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.101837 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.103220 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.104632 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.106174 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.107714 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.109582 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.110908 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.151796 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.174247 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.175694 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.177195 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.178952 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.181181 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.183272 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.185100 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.186942 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.188822 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.190908 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.193008 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.194966 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced W0000 00:00:1721359902.196077 50782 gpu_timer.cc:114] Skipping the delay kernel, measurement accuracy will be reduced <matplotlib.colorbar.Colorbar at 0x7f802c780310>

Define the discriminator loss

- The

discriminator_lossfunction takes 2 inputs: real images and generated images. real_lossis a sigmoid cross-entropy loss of the real images and an array of ones(since these are the real images).generated_lossis a sigmoid cross-entropy loss of the generated images and an array of zeros (since these are the fake images).- The

total_lossis the sum ofreal_lossandgenerated_loss.

def discriminator_loss(disc_real_output, disc_generated_output):

real_loss = loss_object(tf.ones_like(disc_real_output), disc_real_output)

generated_loss = loss_object(tf.zeros_like(disc_generated_output), disc_generated_output)

total_disc_loss = real_loss + generated_loss

return total_disc_loss

The training procedure for the discriminator is shown below.

To learn more about the architecture and the hyperparameters you can refer to the pix2pix paper.

Define the optimizers and a checkpoint-saver

generator_optimizer = tf.keras.optimizers.Adam(2e-4, beta_1=0.5)

discriminator_optimizer = tf.keras.optimizers.Adam(2e-4, beta_1=0.5)

checkpoint_dir = './training_checkpoints'

checkpoint_prefix = os.path.join(checkpoint_dir, "ckpt")

checkpoint = tf.train.Checkpoint(generator_optimizer=generator_optimizer,

discriminator_optimizer=discriminator_optimizer,

generator=generator,

discriminator=discriminator)

Generate images

Write a function to plot some images during training.

- Pass images from the test set to the generator.

- The generator will then translate the input image into the output.

- The last step is to plot the predictions and voila!

def generate_images(model, test_input, tar):

prediction = model(test_input, training=True)

plt.figure(figsize=(15, 15))

display_list = [test_input[0], tar[0], prediction[0]]

title = ['Input Image', 'Ground Truth', 'Predicted Image']

for i in range(3):

plt.subplot(1, 3, i+1)

plt.title(title[i])

# Getting the pixel values in the [0, 1] range to plot.

plt.imshow(display_list[i] * 0.5 + 0.5)

plt.axis('off')

plt.show()

Test the function:

for example_input, example_target in test_dataset.take(1):

generate_images(generator, example_input, example_target)

Training

- For each example input generates an output.

- The discriminator receives the

input_imageand the generated image as the first input. The second input is theinput_imageand thetarget_image. - Next, calculate the generator and the discriminator loss.

- Then, calculate the gradients of loss with respect to both the generator and the discriminator variables(inputs) and apply those to the optimizer.

- Finally, log the losses to TensorBoard.

log_dir="logs/"

summary_writer = tf.summary.create_file_writer(

log_dir + "fit/" + datetime.datetime.now().strftime("%Y%m%d-%H%M%S"))

@tf.function

def train_step(input_image, target, step):

with tf.GradientTape() as gen_tape, tf.GradientTape() as disc_tape:

gen_output = generator(input_image, training=True)

disc_real_output = discriminator([input_image, target], training=True)

disc_generated_output = discriminator([input_image, gen_output], training=True)

gen_total_loss, gen_gan_loss, gen_l1_loss = generator_loss(disc_generated_output, gen_output, target)

disc_loss = discriminator_loss(disc_real_output, disc_generated_output)

generator_gradients = gen_tape.gradient(gen_total_loss,

generator.trainable_variables)

discriminator_gradients = disc_tape.gradient(disc_loss,

discriminator.trainable_variables)

generator_optimizer.apply_gradients(zip(generator_gradients,

generator.trainable_variables))

discriminator_optimizer.apply_gradients(zip(discriminator_gradients,

discriminator.trainable_variables))

with summary_writer.as_default():

tf.summary.scalar('gen_total_loss', gen_total_loss, step=step//1000)

tf.summary.scalar('gen_gan_loss', gen_gan_loss, step=step//1000)

tf.summary.scalar('gen_l1_loss', gen_l1_loss, step=step//1000)

tf.summary.scalar('disc_loss', disc_loss, step=step//1000)

The actual training loop. Since this tutorial can run of more than one dataset, and the datasets vary greatly in size the training loop is setup to work in steps instead of epochs.

- Iterates over the number of steps.

- Every 10 steps print a dot (

.). - Every 1k steps: clear the display and run

generate_imagesto show the progress. - Every 5k steps: save a checkpoint.

def fit(train_ds, test_ds, steps):

example_input, example_target = next(iter(test_ds.take(1)))

start = time.time()

for step, (input_image, target) in train_ds.repeat().take(steps).enumerate():

if (step) % 1000 == 0:

display.clear_output(wait=True)

if step != 0:

print(f'Time taken for 1000 steps: {time.time()-start:.2f} sec\n')

start = time.time()

generate_images(generator, example_input, example_target)

print(f"Step: {step//1000}k")

train_step(input_image, target, step)

# Training step

if (step+1) % 10 == 0:

print('.', end='', flush=True)

# Save (checkpoint) the model every 5k steps

if (step + 1) % 5000 == 0:

checkpoint.save(file_prefix=checkpoint_prefix)

This training loop saves logs that you can view in TensorBoard to monitor the training progress.

If you work on a local machine, you would launch a separate TensorBoard process. When working in a notebook, launch the viewer before starting the training to monitor with TensorBoard.

Launch the TensorBoard viewer (Sorry, this doesn't display on tensorflow.org):

%load_ext tensorboard

%tensorboard --logdir {log_dir}

You can view the results of a previous run of this notebook on TensorBoard.dev.

Finally, run the training loop:

fit(train_dataset, test_dataset, steps=40000)

Time taken for 1000 steps: 115.33 sec

Step: 39k ....................................................................................................

Interpreting the logs is more subtle when training a GAN (or a cGAN like pix2pix) compared to a simple classification or regression model. Things to look for:

- Check that neither the generator nor the discriminator model has "won". If either the

gen_gan_lossor thedisc_lossgets very low, it's an indicator that this model is dominating the other, and you are not successfully training the combined model. - The value

log(2) = 0.69is a good reference point for these losses, as it indicates a perplexity of 2 - the discriminator is, on average, equally uncertain about the two options. - For the

disc_loss, a value below0.69means the discriminator is doing better than random on the combined set of real and generated images. - For the

gen_gan_loss, a value below0.69means the generator is doing better than random at fooling the discriminator. - As training progresses, the

gen_l1_lossshould go down.

Restore the latest checkpoint and test the network

ls {checkpoint_dir}

checkpoint ckpt-5.data-00000-of-00001 ckpt-1.data-00000-of-00001 ckpt-5.index ckpt-1.index ckpt-6.data-00000-of-00001 ckpt-2.data-00000-of-00001 ckpt-6.index ckpt-2.index ckpt-7.data-00000-of-00001 ckpt-3.data-00000-of-00001 ckpt-7.index ckpt-3.index ckpt-8.data-00000-of-00001 ckpt-4.data-00000-of-00001 ckpt-8.index ckpt-4.index

# Restoring the latest checkpoint in checkpoint_dir

checkpoint.restore(tf.train.latest_checkpoint(checkpoint_dir))

<tensorflow.python.checkpoint.checkpoint.CheckpointLoadStatus at 0x7f7ef00ef8e0>

Generate some images using the test set

# Run the trained model on a few examples from the test set

for inp, tar in test_dataset.take(5):

generate_images(generator, inp, tar)